Various ramblings in and around the IT landscape.

Come and join me as I find and comment on different topics that I find interesting.

A useful script for enabling/disabling HD LEDs in a server running TrueNAS

If you happen to have a large storage array and are running TrueNAS, this script might come in handy. It makes it easy to turn on/off the LED lights associated with the drive bays (assuming your drive bays do have them) To run it, its as simple as this

Cheat Sheet: Expand LVM volume on linux

Source: https://packetpushers.net/ubuntu-extend-your-default-lvm-space/ I didn’t have to follow all that’s in there so to keep it short, use these commands. Run this first: Now, run this second command: Done! Refresh your Cloudron dashboard and check stats page. No reboot needed.

PowerShell Script to add users to Microsoft Team Sites and Channels in bulk

Ever found your self looking for a way to add a group of users to a team site and one or more channels in bulk? I was in that situation the other day and decided to see if there was an easy way to take care of it using PowerShell. The following script is what […]

How to cleanup exchange log files using powershell

I found an excellent article here that provided a nice PowerShell script to help automate the cleanup of Exchange related log files (tested on 2013/2016/2019). I made a few modifications to add the following functonality Support for command line parameters for base paths and number of days to keep Support for WhatIf Parameter Loading of […]

Compare Microsoft Teams User List against a CSV file using PowerShell

I was looking for a why to bulk add a list of users into a Microsoft Teams Group however before I did that I wanted to compare the list of users in the team group with a list of users that I pulled from another system. With this goal in mind I pulled together the […]

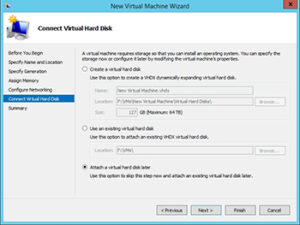

How to handle permissions for Hyper-V disks

This is a repost from this article I recently created a large number of Hyper-V virtual machines as a part of an educational video series that I am about to produce. During the process of shuffling some resources around, I discovered an odd permissions problem related to Hyper-V virtual hard disks in Windows Server 2016. […]

Categories

- Linux (14)

- Media Center (2)

- Microsoft (8)

- Mobile (3)

- Networking (9)

- Uncategorized (4)

- PDC (2)

- Blockchain (1)

- Scripting (23)

- Data Visualization (1)

- Social Networking (2)

- Development (72)

- Utilities (11)

- DNN (1)

- Virtualization (10)

- Docker (1)

- Enterprise Architecture (1)

- FreeNas (6)

- General (4)

- Home Automaton (1)

- Information (11)

Tag Cloud

active directory arr azure blockchain c# c# threading async-wait cheat-sheet docker excel freenas hardware hyper-v innovation letsencrypt linux mail network onedrive powershell scripting ssl storage teams truenas unifi